Deep Learning (Python implementation of Neuron from Scratch)

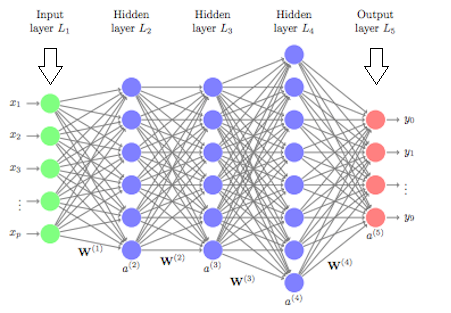

Deep Learning is a sub-field of Machine Learning concerned with algorithms called Artificial Neural Networks (ANN), which are inspired by the structure and function of the human brain.

It makes the computation of multi-layer neural networks feasible.

It is based on the concept of the human brain and its neurons.

Rosenblatt’s Single Layer Perceptron (1957).

An example of a neuron showing the inputs (x1, x2), their corresponding weights (w1, w2), a bias (b) and the activation function (f) applied to the weighted sum of the inputs:

x1*w1 + x2*w2 + b
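The weighted sum and activation described above can be sketched in a few lines. This is only a minimal illustration: the names `step` and `neuron_output`, the choice of a step activation and the sample values are all assumptions made for the example.

```python
import numpy as np

def step(z):
    # Hypothetical activation f: outputs 1 when the weighted sum is non-negative
    return 1 if z >= 0 else 0

def neuron_output(x, w, b, f=step):
    # Weighted sum x1*w1 + x2*w2 + b, followed by the activation f
    z = np.dot(x, w) + b
    return f(z)

# Illustrative values only
x = np.array([1, 0])      # inputs x1, x2
w = np.array([0.5, 0.5])  # weights w1, w2
b = -0.2                  # bias

print(neuron_output(x, w, b))  # weighted sum is 0.5 - 0.2 = 0.3, so the output is 1
```

Swapping `step` for another function changes the behaviour of the neuron without touching the weighted sum.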

This is the difference between Deep Learning and older Machine Learning.

Plot of performance vs. amount of data. Older Machine Learning reaches its limit after a certain threshold, while Deep Learning keeps improving its performance as the amount of data grows.

import tensorflow as tf

import keras

These are the two basic libraries used in Deep Learning: TensorFlow, by Google, and Keras. There is also a library named PyTorch, by Facebook. Keras now also ships inside TensorFlow as tensorflow.keras, so the two can be used together as a single library.

Now we will implement an artificial neuron as logic gates in Python.

We will implement an MC neuron (McCulloch-Pitts neuron) for the AND gate, so that we can see what happens from the very basics.

import numpy as np

from random import choice

Import the basic Python libraries.

w = [1, 1]

training_data = [
    (np.array([0, 0]), 0),
    (np.array([0, 1]), 0),
    (np.array([1, 0]), 0),
    (np.array([1, 1]), 1),
]

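Define the weights w and the dataset we will work on.

Now we will define an activation function based on the basic AND gate concept: with both weights set to 1, the output is 1 only when the weighted sum reaches 2.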

def Activation_Fun(x):
    if x < 2:
        return 0
    else:
        return 1

Now we will run the artificial neuron on our dataset and check that it works on the principle of the AND gate.

for x, y in training_data:
    result = np.dot(x, w)  # part 1: weighted sum of the inputs
    print(x, "--->", Activation_Fun(result))  # part 2: apply the activation

After running the above code we will get an output like:

[0 0] ---> 0

[0 1] ---> 0

[1 0] ---> 0

[1 1] ---> 1

Hence, our neuron successfully reproduces the AND gate on our data.
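The same kind of neuron can model other gates just by changing the threshold. The sketch below is an illustration under assumptions: `mc_neuron` and its `threshold` parameter are hypothetical names, not part of the code above. With both weights set to 1, a threshold of 2 gives AND and a threshold of 1 gives OR.

```python
import numpy as np

def mc_neuron(x, w, threshold):
    # Outputs 1 when the weighted sum reaches the threshold, otherwise 0
    return 1 if np.dot(x, w) >= threshold else 0

inputs = [np.array([0, 0]), np.array([0, 1]), np.array([1, 0]), np.array([1, 1])]
w = np.array([1, 1])

and_outputs = [mc_neuron(x, w, threshold=2) for x in inputs]
or_outputs = [mc_neuron(x, w, threshold=1) for x in inputs]

print("AND:", and_outputs)  # [0, 0, 0, 1]
print("OR: ", or_outputs)   # [0, 1, 1, 1]
```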

Rosenblatt’s Single Layer Perceptron

(adjust the weights and find the best of them)

training_data = [
    (np.array([0, 0]), 0),
    (np.array([0, 1]), 1),
    (np.array([1, 0]), 1),
    (np.array([1, 1]), 1),
]

We define the dataset as before, this time with the outputs of the OR gate.

n = training_data[0][0].shape[0]  # number of inputs per sample
w = np.zeros(n + 1)               # weights, with one extra entry for the bias
errors = []                       # to store the errors
epochs = 1000                     # number of iterations
lr = 0.1                          # learning rate of the model

Now we have defined some variables: n (the size of each input), w (the weights, including one entry for the bias), errors (to store the errors), epochs (the number of iterations) and lr (the learning rate of the model, set as we want).

def Activation_Fun(x):
    if x >= 0:
        return 1
    else:
        return 0

def Sum(x):
    s = np.dot(w, x)
    return s

Now we have defined the activation function as in the example above, this time with the concept of the OR gate (the output is 1 when the sum is at least 0), and Sum to compute the weighted sum of the inputs.
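Since w has one more entry than each input, w[0] can act as the bias once a constant 1 is placed in front of the input. A small sketch of that equivalence follows; the weight values are made up purely for illustration.

```python
import numpy as np

w = np.array([-1.0, 1.0, 1.0])  # illustrative values: [bias, w1, w2]
x = np.array([0, 1])            # raw input x1, x2

x_aug = np.insert(x, 0, 1)      # prepend a constant 1 -> [1, 0, 1]

with_trick = np.dot(w, x_aug)       # w[0]*1 + w[1]*x1 + w[2]*x2
explicit = np.dot(w[1:], x) + w[0]  # weighted sum plus a separate bias term

print(x_aug, with_trick, explicit)
```

Both expressions give the same value, which is why a single dot product is enough to handle the weights and the bias together.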

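for i in range(epochs):
    for x, y in training_data:
        x = np.insert(x, 0, 1)  # prepend a constant 1 for the bias
        z = Sum(x)              # weighted sum
        a = Activation_Fun(z)   # prediction
        e = y - a               # error
        w = w + (lr * e * x)    # update the weights
        w[0] = -1               # keep the bias weight fixed at -1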

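Here we train our model on the dataset. For each sample we prepend a 1 to the input for the bias, compute the prediction, find the error e as the difference between the target and the prediction, and then update w with the formula w = w + lr*e*x. The bias weight w[0] is kept fixed at -1.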
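For comparison, the same update rule can be wrapped in a function that learns the bias instead of fixing it at -1, and that stops as soon as a full epoch produces no errors. This is a sketch under assumptions rather than the method used in this article: `train_perceptron`, `step` and the early-stopping check are hypothetical additions.

```python
import numpy as np

def step(z):
    # Same convention as the activation above: 1 when z >= 0, else 0
    return 1 if z >= 0 else 0

def train_perceptron(data, lr=0.1, epochs=1000):
    n = data[0][0].shape[0]
    w = np.zeros(n + 1)                     # w[0] is the bias, learned like any other weight
    for _ in range(epochs):
        mistakes = 0
        for x, y in data:
            x_aug = np.insert(x, 0, 1)      # constant 1 for the bias
            e = y - step(np.dot(w, x_aug))  # error: target minus prediction
            if e != 0:
                w = w + lr * e * x_aug      # perceptron update rule
                mistakes += 1
        if mistakes == 0:                   # every sample classified correctly
            break
    return w

or_data = [
    (np.array([0, 0]), 0),
    (np.array([0, 1]), 1),
    (np.array([1, 0]), 1),
    (np.array([1, 1]), 1),
]

w_learned = train_perceptron(or_data)
predictions = [step(np.dot(w_learned, np.insert(x, 0, 1))) for x, _ in or_data]
print(w_learned, predictions)
```

Because the OR gate is linearly separable, the loop is expected to stop long before the 1000 epochs are used up.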

for x, y in training_data:
    x1 = np.insert(x, 0, 1)
    result = np.dot(x1, w)
    print("{}, {} ---> {}".format(x, result, Activation_Fun(result)))

np.round(w)

Now we run the trained model on the values from our dataset and print the result for each input, followed by the rounded weights.

The output would be something like this:

[0 0], -1.0 ---> 0

[0 1], 0.09999999999999987 ---> 1

[1 0], 0.09999999999999987 ---> 1

[1 1], 1.1999999999999997 ---> 1

Out[7]:

array([-1., 1., 1.])

This gives us the rounded weights -1, 1 and 1.
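As a quick sanity check, the rounded weights can be plugged back into the neuron to confirm that they still reproduce the OR gate. The sketch below hard-codes the values -1, 1 and 1 from the output above; the helper name `predict` is an assumption for illustration.

```python
import numpy as np

w = np.array([-1.0, 1.0, 1.0])  # [bias, w1, w2], rounded values from the output above

def predict(x, w):
    # Prepend the constant 1 for the bias, then apply the step activation (z >= 0 -> 1)
    z = np.dot(np.insert(x, 0, 1), w)
    return 1 if z >= 0 else 0

or_table = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 1}
results = {inputs: predict(np.array(inputs), w) for inputs in or_table}

print(results == or_table)  # True
```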

That completes the basic model of a neuron in Deep Learning.

If you find it useful, kindly share it and give feedback or ask questions in the comment section. Thank you.